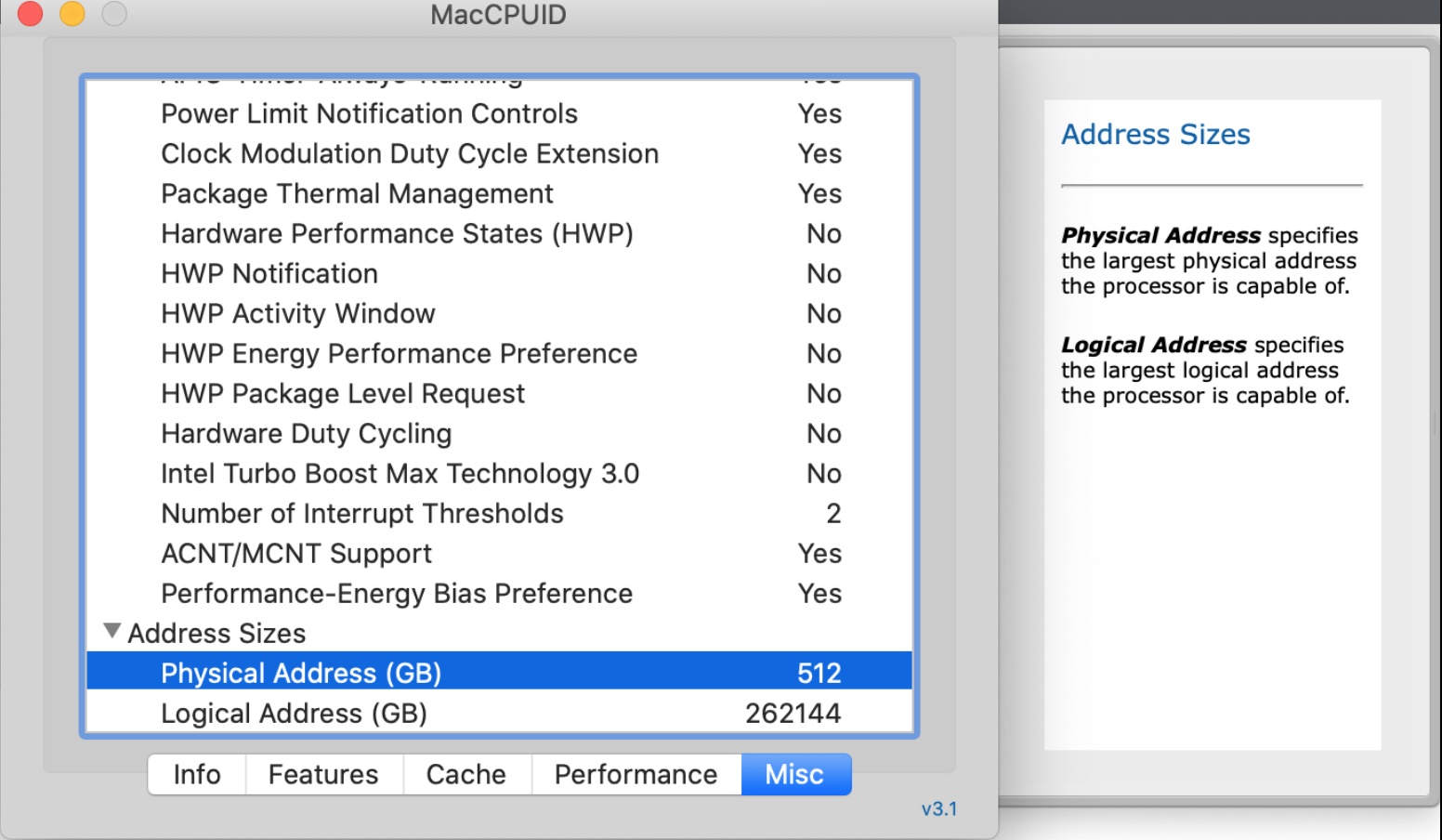

One of the least understood areas of Analysis Services performance seems to be that of memory limits – how they work, and how to configure them effectively. I was going to start with an overview of Performance Advisor for Analysis Services (aka, PA for SSAS), but during the beta I ran across such a good example of the software in action in diagnosing a problem with memory, I think it'll work just as well to go through that issue step-by-step.

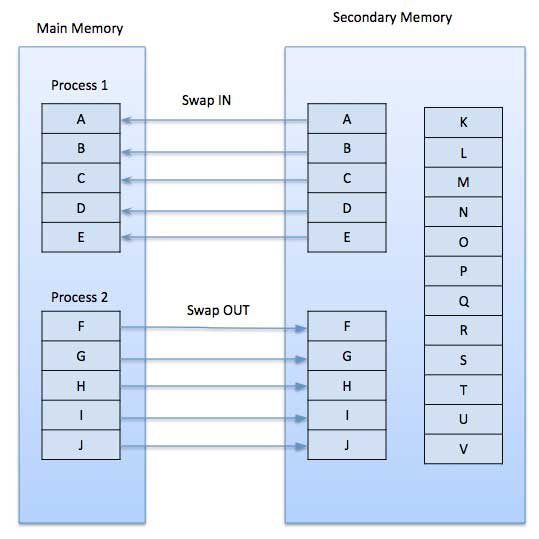

First, some background. SSAS has two general categories of memory, shrinkable and nonshrinkable, and they work pretty much like they sound. Shrinkable memory can be easily reduced and returned to the OS. Nonshrinkable memory, on the other hand, is generally used for more essential system-related stuff such as memory allocators and metadata objects, and is not easily reduced.

PA for SSAS shows both types on the SSAS Memory Usage chart:

If you switch to 'Memory by Category' mode via right-click menu, you get a more detailed breakdown. Just like the chart above, the memory with hash marks is nonshrinkable:

SSAS uses memory limit settings to determine how it allocates and manages its internal memory. Memory\LowMemoryLimit defaults to 65% of the total physical memory on the machine (75% on AS2005), and Memory\TotalMemoryLimit (also sometimes called the High Memory Limit) defaults to 80%. The latter is the total amount of memory that the SSAS process itself (msmdsrv.exe) can consume. Note that on the charts above, the orange line represents the Low memory limit and the red line the Total memory limit. This makes it very easy to see how close SSAS's actual memory consumption is to these limits. Once memory usage hits the Low limit, memory cleaner threads will kick in and start moving data out of memory in a relatively non-aggressive fashion. If memory hits the Total limit, the cleaner goes into crisis mode… it spawns additional threads and gets much more aggressive about memory cleanup, and this can dramatically impact performance.
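To make the arithmetic concrete, here is a rough sketch of how those two default percentages translate into byte thresholds on a given machine, and which cleaner behavior each range of usage corresponds to. This is only an illustration of the description above, not how SSAS is implemented internally; the function names, the state labels, and the 32 GiB machine are all made up for the example.

```python
from dataclasses import dataclass

GIB = 1024 ** 3


@dataclass(frozen=True)
class MemoryLimits:
    low_bytes: int    # threshold where the cleaner threads start working
    total_bytes: int  # threshold where the cleaner goes into crisis mode


def compute_limits(physical_bytes: int, low_pct: int = 65, total_pct: int = 80) -> MemoryLimits:
    """Convert percentage limits into byte thresholds.

    The 65/80 defaults are the ones quoted in the post; everything else
    here (names, types) is hypothetical and for illustration only.
    """
    if not 0 < low_pct <= total_pct <= 100:
        raise ValueError("expected 0 < low_pct <= total_pct <= 100")
    return MemoryLimits(
        low_bytes=physical_bytes * low_pct // 100,
        total_bytes=physical_bytes * total_pct // 100,
    )


def cleaner_state(usage_bytes: int, limits: MemoryLimits) -> str:
    """Classify process memory usage against the two limits."""
    if usage_bytes >= limits.total_bytes:
        return "crisis"    # at or above the Total limit: aggressive cleanup
    if usage_bytes >= limits.low_bytes:
        return "cleaning"  # between Low and Total: relatively gentle cleanup
    return "idle"          # below the Low limit: cleaner not active


# Hypothetical server with 32 GiB of physical memory.
limits = compute_limits(32 * GIB)
for usage_gib in (10, 24, 30):
    print(usage_gib, "GiB ->", cleaner_state(usage_gib * GIB, limits))
```

On that hypothetical 32 GiB box the Low limit lands at roughly 20.8 GiB and the Total limit at roughly 25.6 GiB, so there is only about 5 GiB of headroom between the cleaner waking up and the cleaner panicking.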

Between the Low and Total limits, SSAS uses an economic memory management model to determine which memory to clean up. This model can be adjusted by some other parameters related to the memory price, but I'm not going to get into those here.

When it comes to memory management, SSAS is entirely self-governing, and unlike SQL Server it doesn't consider external low physical memory conditions (which can be signaled by Windows) or low VAS memory. This may be partly because SSAS is already much more subject to Windows' own memory management than SQL Server is, since Analysis Services databases are a collection of files on the file system and can make heavy use of the file system cache, whereas SQL Server does not. This means that you can have a significant amount of SSAS data loaded into RAM by virtue of the file cache, and this will not show up as part of the SSAS process memory, or in any way be governed by the SSAS memory limits. (For this reason we now show system file cache usage and file cache hit ratios on the dashboard as well so you can see this.)

On a side note, a byproduct of this design is that if available memory is low and other processes on the server are competing for memory resources, SSAS will pretty much ignore them, and look only to its own memory limit settings to determine what to do. This means, for example, that memory for a SQL Server using the default 'Minimum server memory' setting of 0 can be aggressively and dramatically reduced by an SSAS instance on the same machine with default memory limits, to the point where the SQL Server can become unresponsive. SQL Server is signaled by Windows that memory is low, and responds accordingly by releasing memory back to the OS, which is then gobbled up by SSAS.

Graphical Anomaly, or Serious Memory Problem?

Very early in our beta for PA for SSAS, a beta tester reported what they thought was an odd graphical anomaly in the bottom right of the SSAS Memory Usage chart:

To the untrained eye, this would certainly look like an anomaly, but once you've seen what this chart is supposed to look like, it's fairly obvious that it's an 'H' on top of an 'L'.

This particular tester had been eager to get the beta because they had suffered from poor performance and other strange behaviors for quite some time. They had actually already scheduled Microsoft to come in and help troubleshoot the issues.

What exactly did this 'anomaly' mean? What caused it, how was it resolved, and what was the net effect on performance, if any? All great questions… and this will be the subject of my next post, so stay tuned ;-)

If anyone has any guesses in the meantime feel free to comment. The first person to get it right will win a free license of Performance Advisor for Analysis Services! (that's a $2495 value)